The $1 offline, browser-based AI

For a single $1 payment, you get:

- True privacy—everything runs locally in your browser

- No monthly fees, ever

- No data collection or cloud dependency

- Pre-optimized models that work immediately

- Ongoing updates and support

Main features that make the difference

Discover the core features that power your experience. From beautifully formatted responses to real-time memory monitoring, these are the main features that set us apart.

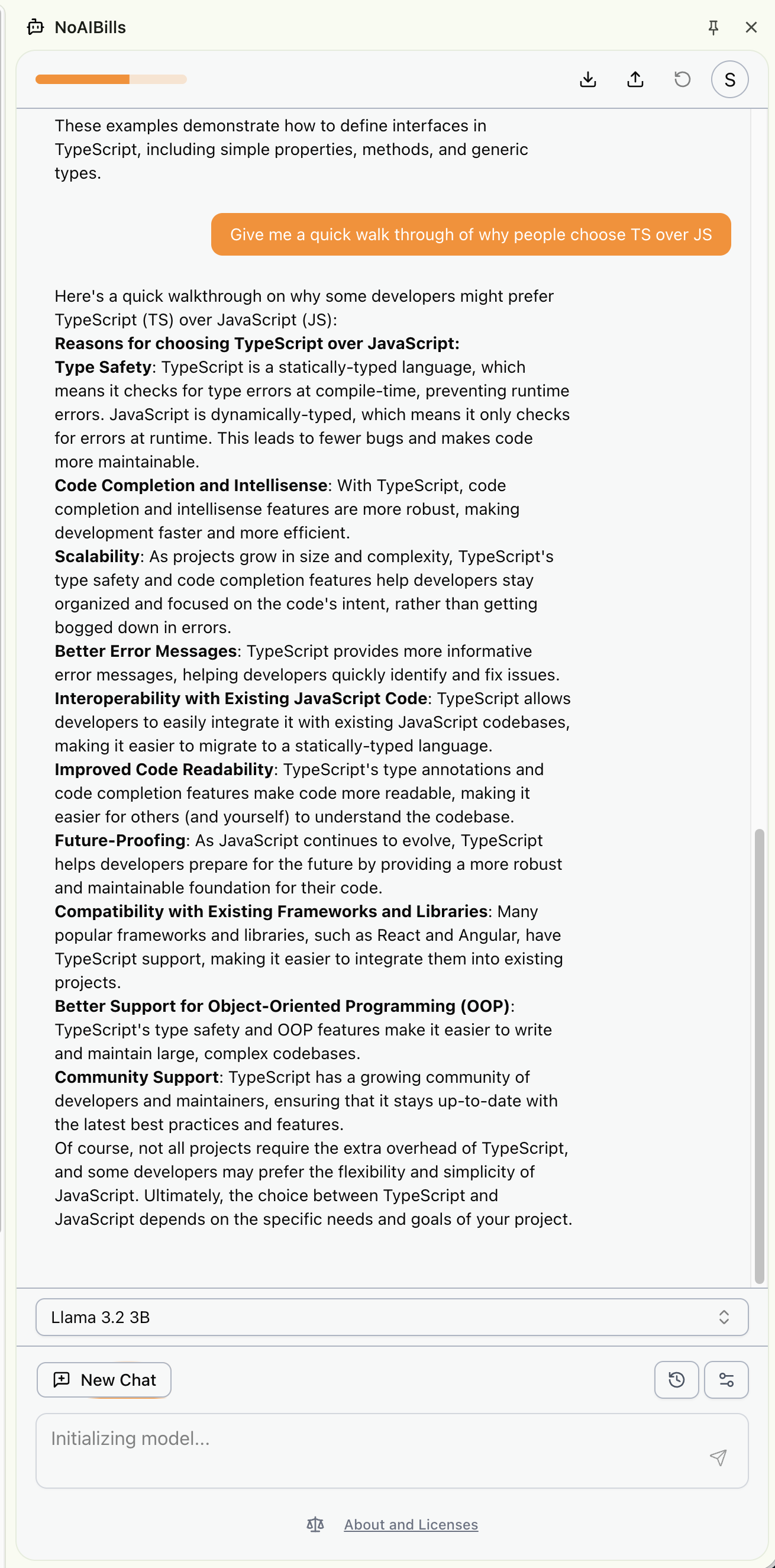

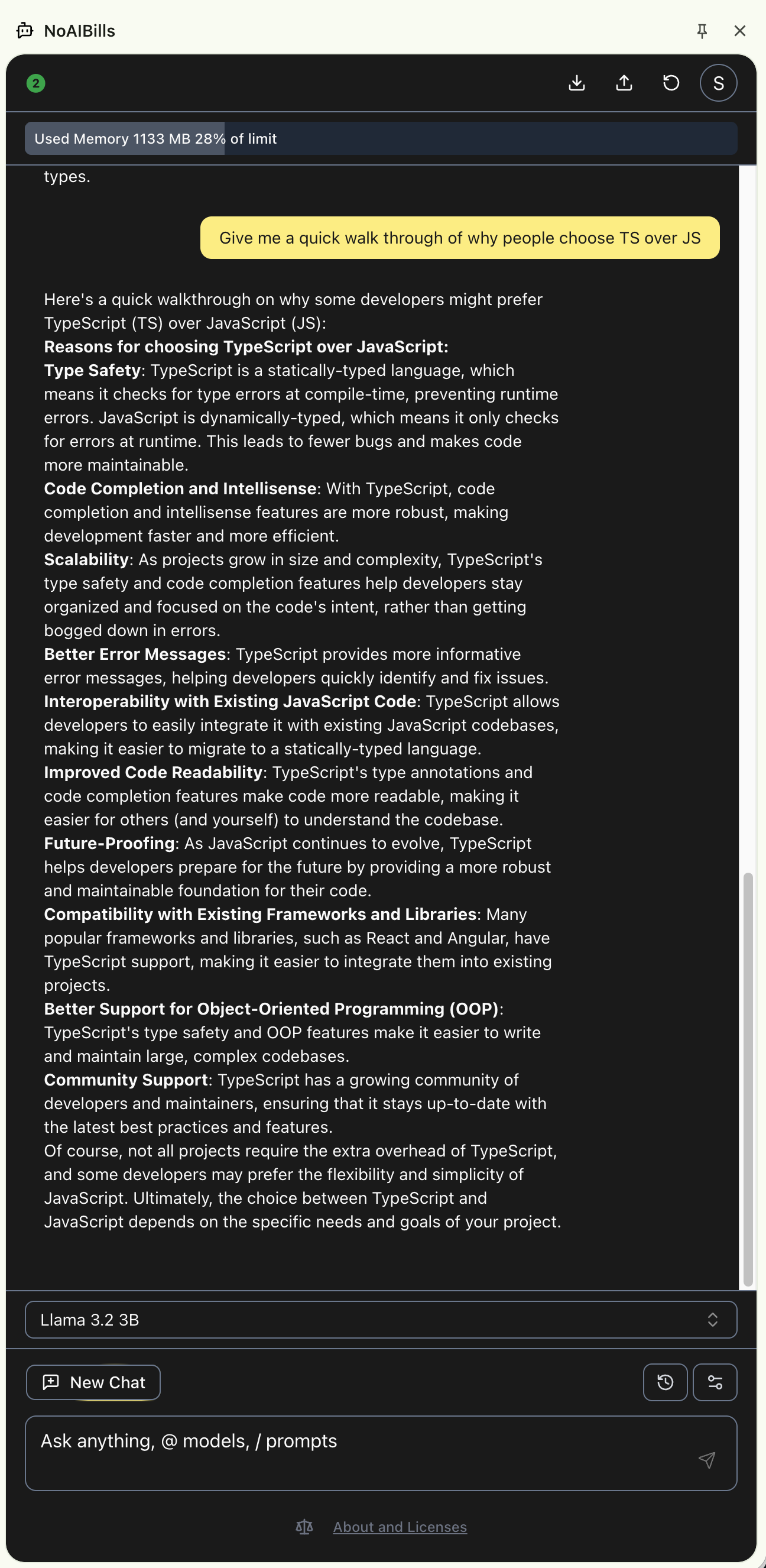

Your favorite Markdown responses

Experience beautifully formatted Markdown responses in both light and dark themes. Get clear, readable content that adapts to your preferences.

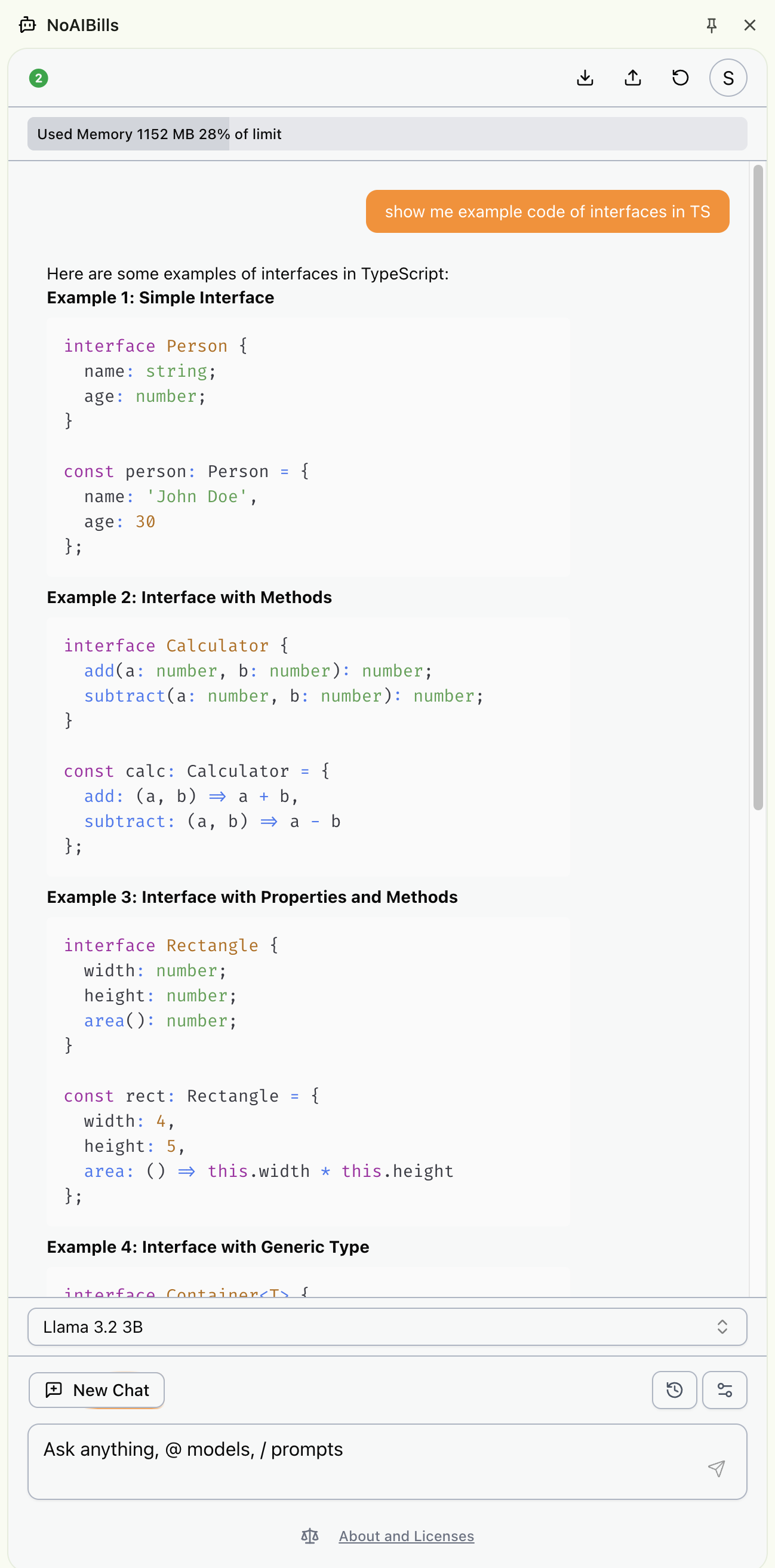

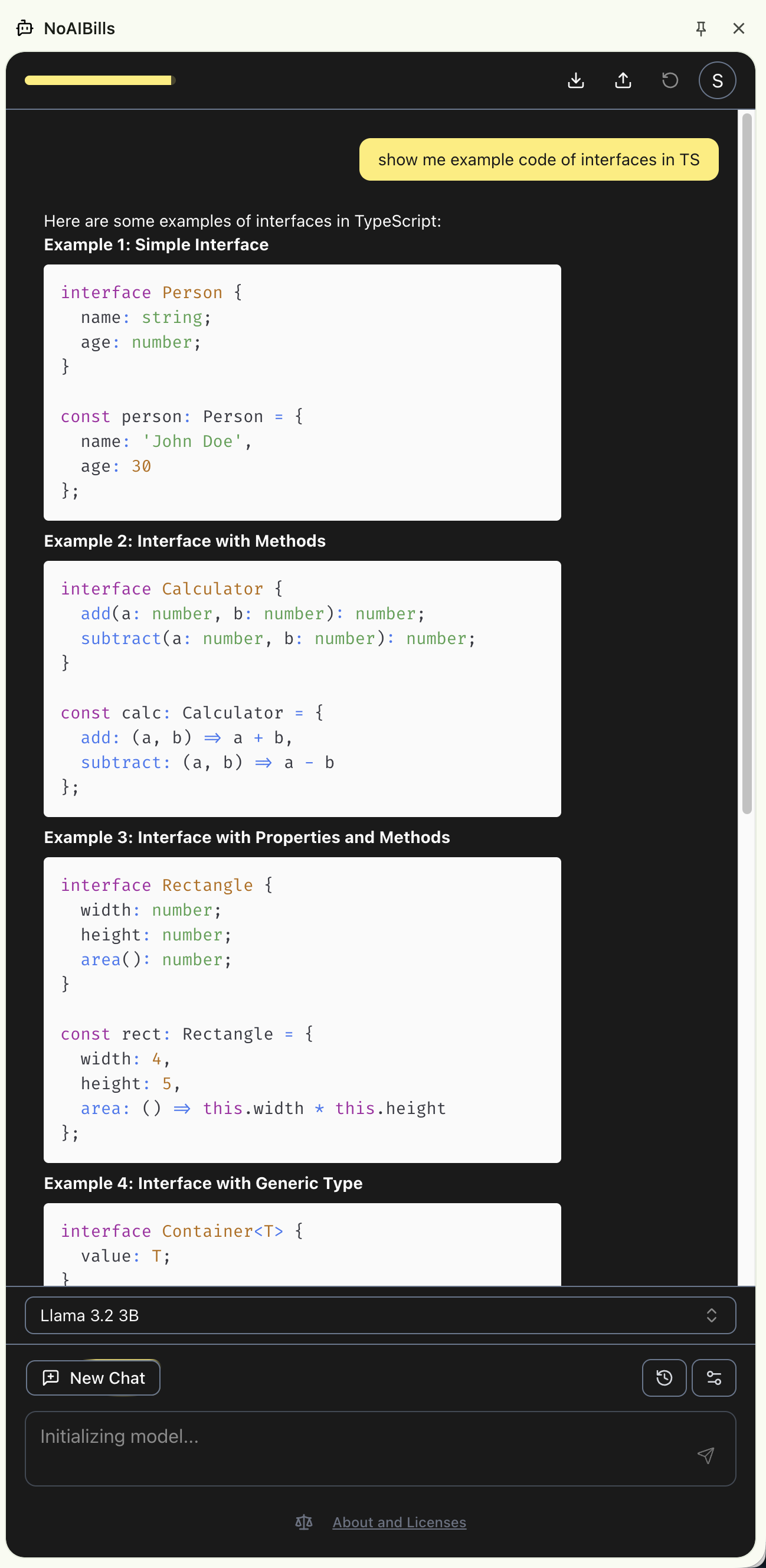

Beautiful code syntax highlighting

View your code with stunning syntax highlighting in both light and dark themes. Get clear, readable code that's easy on the eyes.

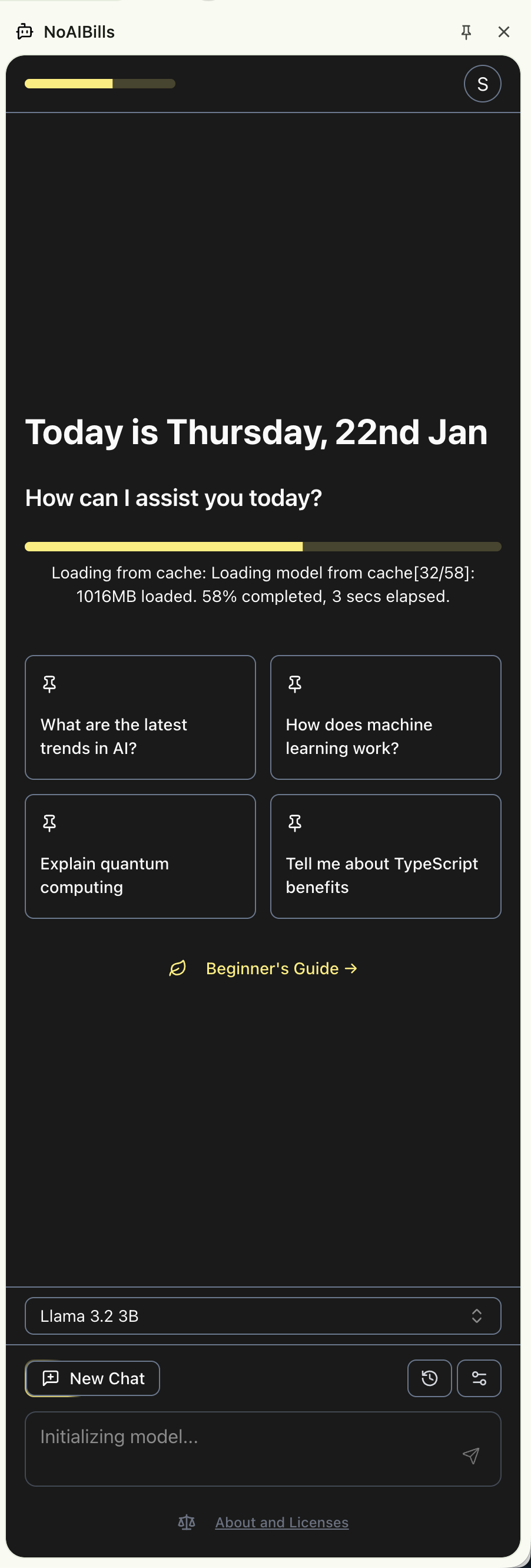

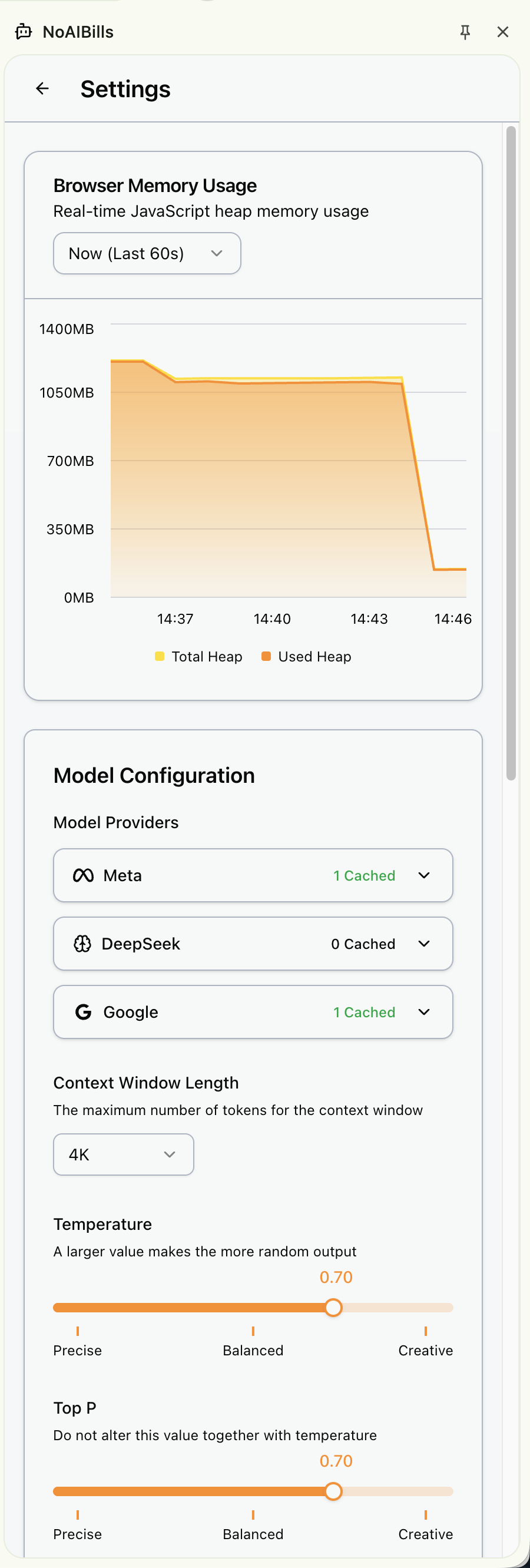

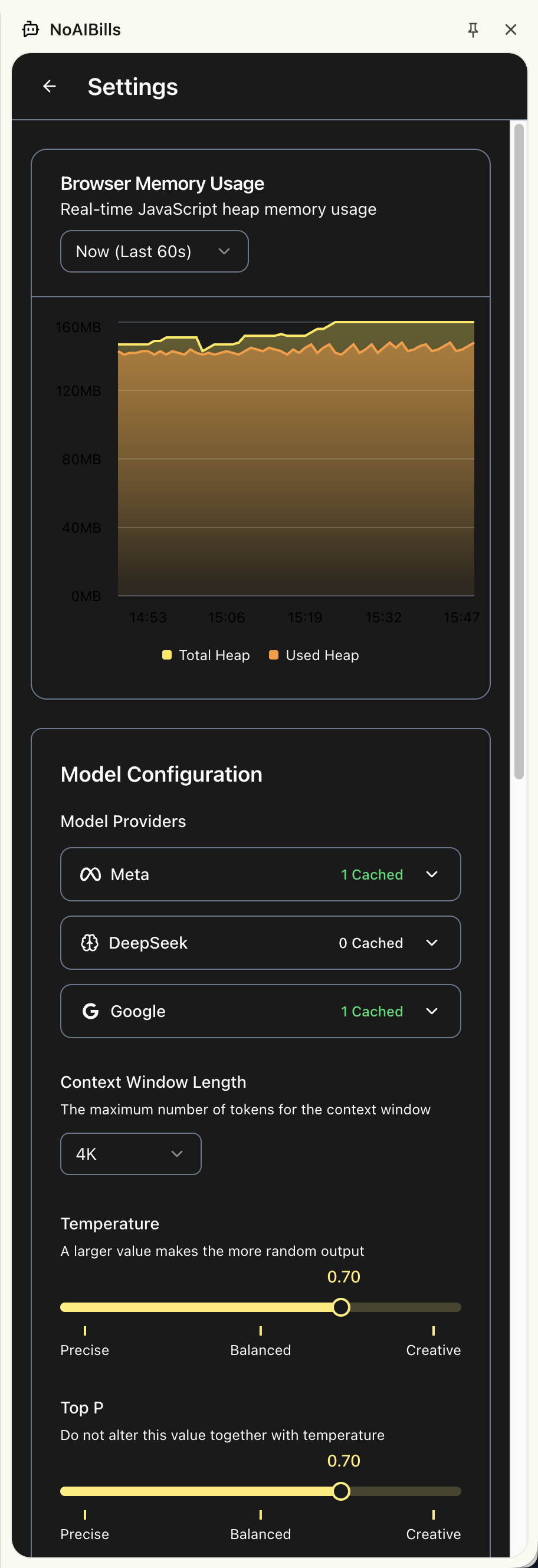

Real-time memory monitoring

Running LLMs on your browser's WebGPU is memory-intensive. Get real-time visibility into memory usage, take preventive action, and clear unused model weights from the cache before memory runs out.
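As a rough illustration of the cache side of this, the sketch below reports how much of the browser's storage quota is in use and deletes cached model artifacts. It is not the extension's actual code. The `webllm/` cache-name prefix and the helper names `clearModelCaches` and `usagePercent` are assumptions made for illustration, and the sketch covers cached weights in browser storage only; how the extension measures GPU memory is not described on this page.

```typescript
// Minimal shape of the browser's CacheStorage that this sketch relies on.
interface CacheStorageLike {
  keys(): Promise<string[]>;
  delete(name: string): Promise<boolean>;
}

// Assumption: model artifacts live in caches whose names start with this prefix.
const MODEL_CACHE_PREFIX = "webllm/";

// Deletes every cache whose name starts with `prefix` and returns the deleted names.
async function clearModelCaches(
  storage: CacheStorageLike,
  prefix: string = MODEL_CACHE_PREFIX,
): Promise<string[]> {
  const deleted: string[] = [];
  for (const name of await storage.keys()) {
    if (name.startsWith(prefix) && (await storage.delete(name))) {
      deleted.push(name);
    }
  }
  return deleted;
}

// Share of the origin's storage quota in use, as a whole percentage (0 when unknown).
function usagePercent(estimate: { usage?: number; quota?: number }): number {
  const { usage = 0, quota = 0 } = estimate;
  return quota > 0 ? Math.round((usage / quota) * 100) : 0;
}
```

In a browser you would pass the global `caches` object to `clearModelCaches` and the result of `navigator.storage.estimate()` to `usagePercent`. Taking both as parameters keeps the helpers easy to exercise outside a browser.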

What's under the hood?

Built on open-source foundations. This extension is a wrapper around powerful open-source projects that enable AI to run entirely in your browser.

Tech Stack

- MLC LLM

Machine Learning Compilation for Large Language Models - the core engine that enables running LLMs directly in your browser using WebGPU. Licensed under Apache License 2.0.

- React

The user interface is built with React, providing a responsive and interactive experience. Licensed under MIT License.

- ShadCN UI

Beautiful, accessible component library that powers the extension's interface. Licensed under MIT License.

- Lucide Icons

Icon library used throughout the extension for a consistent visual language. Licensed under ISC License.

Supported AI Models

- Llama 3.2

Meta's Llama 3.2 models including Llama 3.2 1B, 3B, and variants. Licensed under Llama 3.2 Community License Agreement.

- Phi Models

Microsoft's Phi family including Phi 3, Phi 2, and Phi 1.5 - efficient models optimized for performance.

- Gemma 2

Google's Gemma 2B model, designed for efficient inference. Licensed under Gemma Terms of Use.

- Mistral 7B

Mistral AI's 7B model and variants including Hermes-2-Pro-Mistral-7B, NeuralHermes-2.5-Mistral-7B, and OpenHermes-2.5-Mistral-7B.

- Qwen2

Alibaba's Qwen2 models in 0.5B, 1.5B, and 7B sizes, providing multilingual capabilities.

- DeepSeek-R1

DeepSeek-R1-Distill-Qwen-7B model for advanced reasoning capabilities. Licensed under MIT License.

A portion of proceeds supports the open-source projects that power this extension, helping to ensure their continued development and improvement.

Unlock Premium Features!

Get lifetime access to all premium features

What's included?

No limits on tokens

Generate unlimited responses without token restrictions

No usage limits on the models below

Access to Llama (3, 2, Hermes-2-Pro), Phi (3, 2, 1.5), Gemma-2B, Mistral-7B variants, and Qwen2 (0.5B, 1.5B, 7B) model families

A lifetime of upgrades

Get all future updates and improvements at no extra cost

All messages stored locally

All conversations are stored securely in your browser

No remote API calls

All open-source models run completely offline

Fully private and secure

Your interactions remain completely private and secure

Product Roadmap

Create and use prompts from the Prompt Gallery

Access a library of pre-built prompts and create your own

Access to prompt gallery and prompt editor

Browse and edit prompts with an intuitive interface

Create system prompts

Customize system prompts for personalized AI behavior

Access to browser tabs content

Integrate content from your browser tabs into conversations

Access to new models

Get access to new models as they become available on WebLLM and Hugging Face

Structured JSON generation

Generate structured JSON outputs with schema validation and JSON mode support

Ollama Integration

Connect to local Ollama instances to run larger models on your machine

Frequently asked questions

- How does the extension run AI models in my browser?

The extension uses WebGPU, a modern browser API that gives web pages access to your GPU. Combined with MLC LLM (Machine Learning Compilation), it runs AI models locally in your browser, with no cloud connection needed once a model has been downloaded.

- Do I need an internet connection to use the AI?

Once models are downloaded and cached, you can use the extension completely offline. The initial model download requires internet, but all AI inference happens locally on your device using your GPU.

- Is my data private and secure?

Absolutely. All conversations and data stay on your device. Nothing is sent to cloud servers or third parties. The extension runs entirely in your browser, giving you complete privacy and control over your data.

- What browsers are supported?

The extension works on Chromium-based browsers (Chrome, Edge, Brave, etc.) that support WebGPU. WebGPU is available in Chrome 113+, Edge 113+, and other Chromium browsers. Firefox and Safari support is coming as WebGPU becomes more widely available.

- How much storage space do models require?

Model sizes vary. Smaller models like Llama-3.2-1B require around 1-2 GB, while larger models like Llama-3.2-3B need 3-4 GB. The extension manages model storage efficiently and allows you to download only the models you need.

- Can I use multiple models at once?

You can download multiple models, but only one model runs at a time. You can switch between downloaded models easily. The free version includes Llama-3.2-3B, DeepSeek-R1-Distill-Qwen-7B, and Gemma-2-2b.

- What's the difference between free and paid versions?

The free version supports one chat thread with up to 20 messages and includes 3 models. The paid version ($1 one-time) removes all limits and adds access to all model families (Llama, Phi, Gemma, Mistral, Qwen2), unlimited messages, and lifetime updates.

- How do I update the extension?

The extension updates automatically through the Chrome Web Store, so new models and features arrive without any action on your part. Paid users get lifetime access to all future updates at no additional cost.

- Why do I need to pay if models are open-source?

While the underlying technologies (MLC LLM, WebGPU, and the AI models) are open-source, setting everything up is cumbersome for non-developers and non-technical users. Developers and technical users can wire things up themselves using these open-source technologies. We've packaged everything into a simple extension, optimized models for browser use, and provide ongoing support and updates. A portion of proceeds also supports the open-source projects we depend on.
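For readers curious about the do-it-yourself route mentioned above, here is a minimal sketch of its two basic steps: checking that the browser exposes WebGPU, then sending a prompt to a locally running engine. It is an illustration under stated assumptions, not the extension's code. The helper names `hasWebGPU` and `ask` and the small interfaces are hypothetical; the `chat.completions.create` shape mirrors the OpenAI-style interface that WebLLM's engine exposes.

```typescript
// Minimal shapes so the sketch type-checks without DOM or library typings.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

// True when the browser exposes WebGPU and hands back a usable adapter.
async function hasWebGPU(nav: NavigatorLike | undefined): Promise<boolean> {
  if (!nav || !nav.gpu) return false;
  try {
    return (await nav.gpu.requestAdapter()) !== null;
  } catch {
    return false;
  }
}

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// OpenAI-style surface (assumed here to be satisfied by a WebLLM engine).
interface ChatEngine {
  chat: {
    completions: {
      create(request: { messages: ChatMessage[] }): Promise<{
        choices: { message: { content: string | null } }[];
      }>;
    };
  };
}

// Sends one user prompt and returns the reply text ("" if the model returned none).
async function ask(engine: ChatEngine, prompt: string): Promise<string> {
  const reply = await engine.chat.completions.create({
    messages: [{ role: "user", content: prompt }],
  });
  return reply.choices[0]?.message.content ?? "";
}
```

In a browser you would call `hasWebGPU(navigator)` first and, if it resolves to `true`, obtain an engine from the `@mlc-ai/web-llm` package (for example via its `CreateMLCEngine` function with a model ID from WebLLM's prebuilt list) before calling `ask`. Model download, caching, and UI are left out of the sketch.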
